Comparing results: automated vs manual accessibility testing

The accessibility requirements that apply from 28 June 2025 require service providers to assess the compliance of their digital environments with the European standard EN 301 549 V3.2.1. There are two main approaches to evaluation: relatively resource-intensive manual testing and attractive, free automated testing tools. In this article, we'll explore the differences between the results of automated and manual accessibility testing.

Automated testing tools are useful for checking the accessibility of digital environments and provide a good starting point for identifying issues, but their capabilities are limited. Our audits show that nearly 75% of accessibility issues stem from front-end code, so high-quality software development plays an important role in accessibility. Unfortunately, automated tests cannot detect all of these code-level problems.

Automated tools can check whether certain predetermined technical conditions are met (e.g., whether an `<input>` is associated with a `<label>`), but they can't see the big picture or understand the actual problems in the code (e.g., when elements are grouped visually but not in the code).
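To make the idea of a "predetermined technical condition" concrete, here is a minimal Python sketch of a rule in the spirit of the `<input>`/`<label>` check. It is not how any of the tools in this comparison are implemented: the names `LabelChecker` and `unlabelled_inputs`, the set of exempt input types, and the simplified rule (an input counts as labelled if it has `aria-label` or `aria-labelledby`, sits inside a `<label>`, or its `id` is referenced by a `<label for>`) are our own assumptions.

```python
from html.parser import HTMLParser


class LabelChecker(HTMLParser):
    """Collect <input> elements and the labelling information found in markup."""

    # Input types treated as not needing a <label> (a simplifying assumption).
    EXEMPT_TYPES = {"hidden", "submit", "reset", "button", "image"}

    def __init__(self):
        super().__init__()
        self.label_depth = 0        # > 0 while parsing inside a <label> element
        self.label_for_ids = set()  # ids referenced by <label for="...">
        self.inputs = []            # (id or None, labelled without needing "for")

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "label":
            self.label_depth += 1
            if a.get("for"):
                self.label_for_ids.add(a["for"])
        elif tag == "input":
            if (a.get("type") or "text").lower() in self.EXEMPT_TYPES:
                return
            has_aria = bool(a.get("aria-label") or a.get("aria-labelledby"))
            self.inputs.append((a.get("id"), has_aria or self.label_depth > 0))

    def handle_endtag(self, tag):
        if tag == "label" and self.label_depth > 0:
            self.label_depth -= 1


def unlabelled_inputs(html):
    """Return the ids (None if absent) of inputs that fail the simplified rule."""
    checker = LabelChecker()
    checker.feed(html)
    checker.close()
    # Labels may appear after their input, so "for" references are checked last.
    return [
        input_id
        for input_id, labelled in checker.inputs
        if not labelled and input_id not in checker.label_for_ids
    ]


if __name__ == "__main__":
    page = """
    <label for="email">Email</label><input id="email" type="email">
    <label>Name <input id="name"></label>
    <span>Phone</span><input id="phone" type="tel">
    """
    print(unlabelled_inputs(page))  # ['phone']
```

Because the rule only inspects markup, a set of radio buttons that looks like a group on screen but has no `<fieldset>` and `<legend>` passes it without complaint. That is exactly the kind of issue that only a human tester would notice.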

What's more, automated tools can produce incorrect results: false positives (reported problems that don't actually exist) and false negatives (real problems they fail to identify).

Accessibility is not just a technical requirement, but first and foremost a human-centred approach. Manual testing is necessary to understand how a web page or application actually performs for different users. Manual testing helps to uncover more complex issues and validate findings from automated tools.

Testing

Our aim was to find out how the results of automated and manual accessibility testing differ. For testing, we chose the front page of an international public institution and tested it both with automated tools and manually. For automated testing, we used four free browser extensions: WAVE Evaluation Tool, axe DevTools, ARC Toolkit, and Siteimprove Accessibility Checker.

Manual testing of the desktop version was done with the Chrome browser and the JAWS screen reader; the mobile version was tested on an iOS device with the VoiceOver screen reader and on an Android device with the TalkBack screen reader.

Results

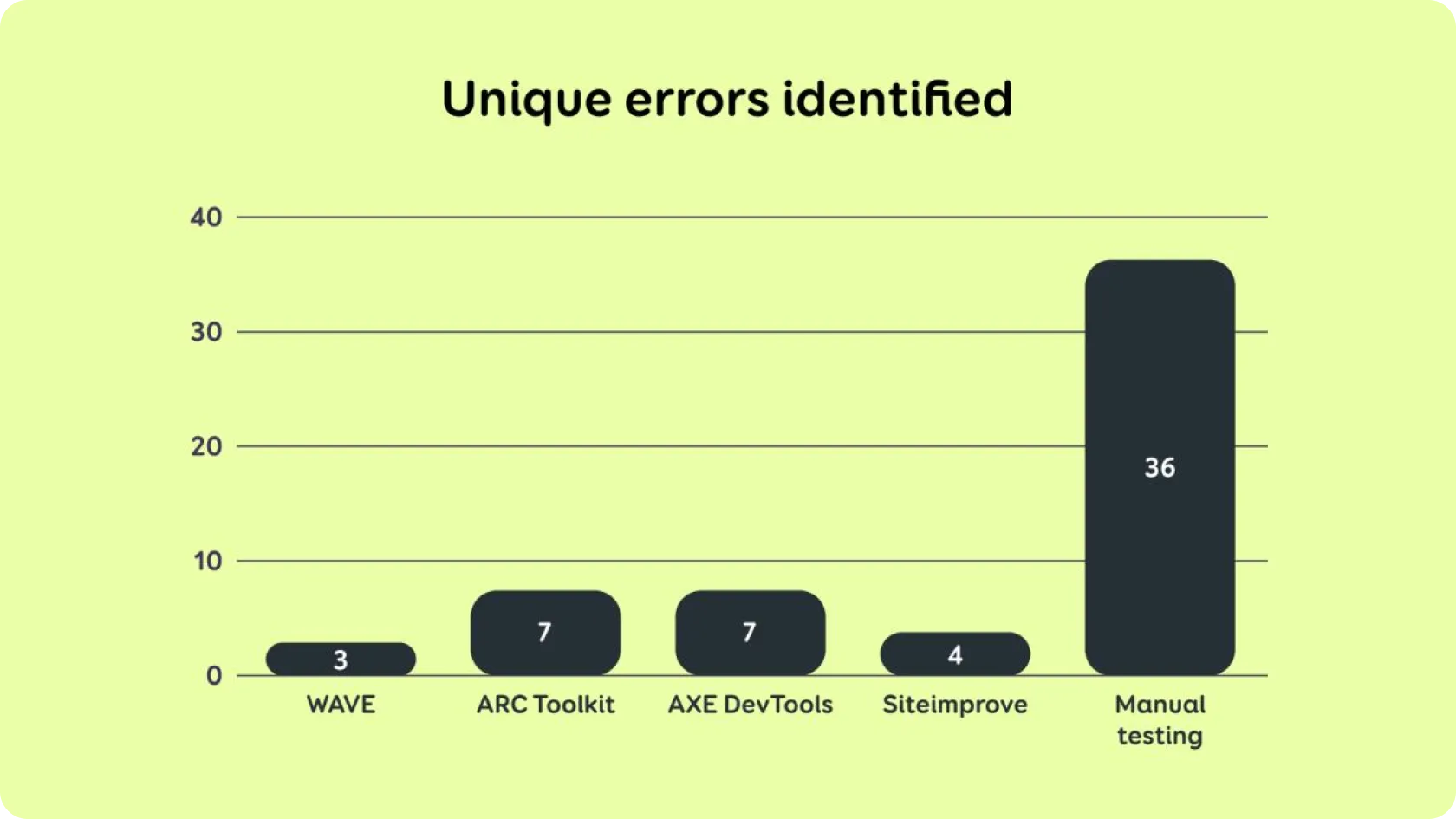

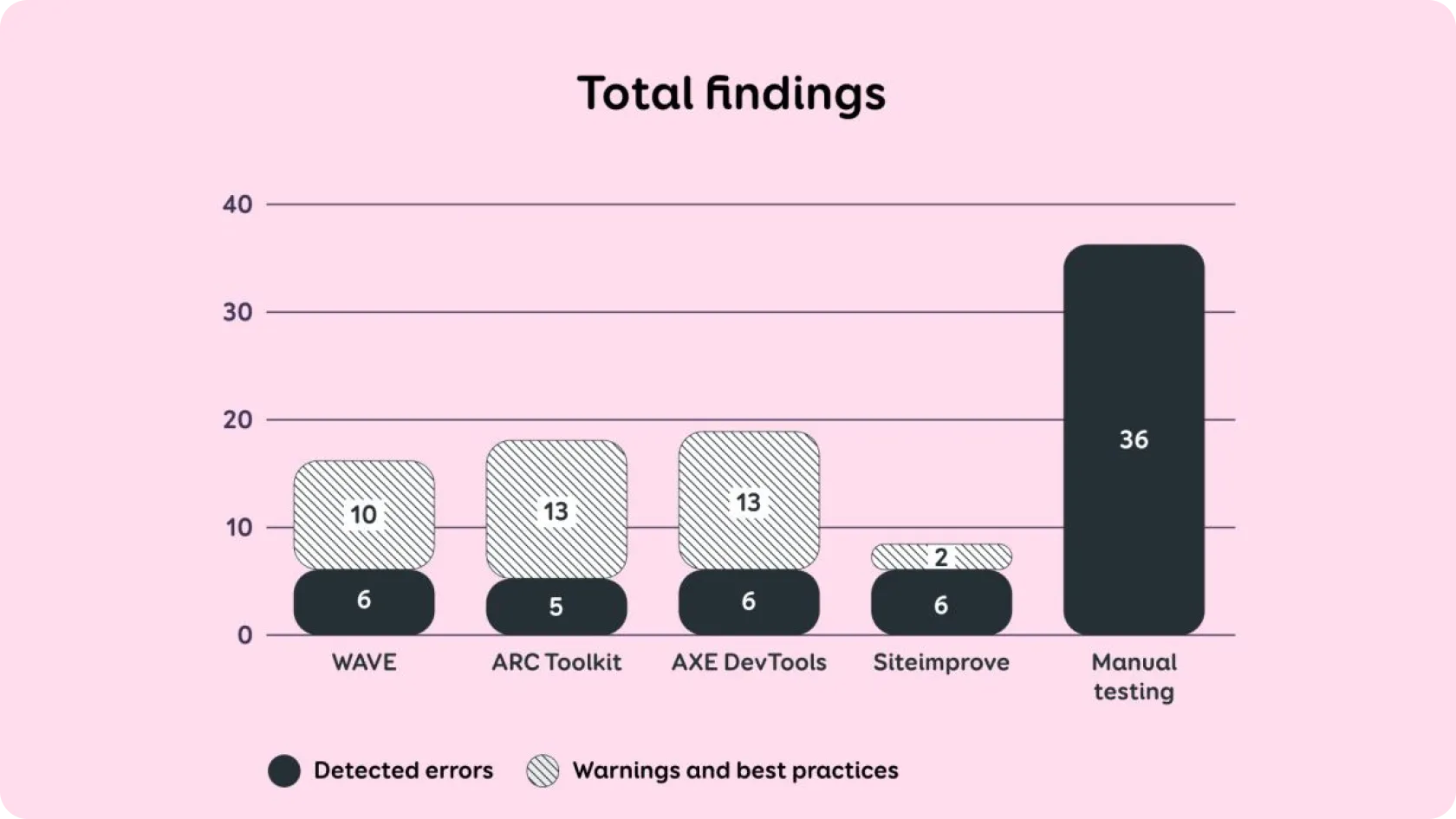

The summarised results in Figure 1 show that automated tools identified significantly fewer accessibility issues than manual testing.

However, the actual number of distinct findings is even smaller, because most automated tools report the same error separately for every affected code element, which produces repetitions. In Table 1, which presents results by finding, we have merged repeated findings into one so that they are comparable with the results of manual testing.

Figure 1. Total errors found

For example, if an automated tool reported four separate errors, one for each incorrect list element, Table 1 shows them as a single error: "one list is read out by the screen reader as four separate lists".
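The merging step can be sketched in Python as follows. This is an illustration under assumptions, not our actual workflow: the `Finding` fields, the hypothetical rule name `list-structure`, and the choice to group by rule plus page component (so that the same rule failing in two unrelated places on the page still counts twice) are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str       # hypothetical rule identifier reported by a tool
    component: str  # page component the element belongs to, assigned by a reviewer
    selector: str   # the individual element the tool flagged


def group_findings(findings):
    """Merge per-element reports of the same rule on the same component.

    Returns {(rule, component): [selectors]}; each key is one finding in the
    comparison table, however many elements the tool reported for it.
    """
    groups = defaultdict(list)
    for f in findings:
        groups[(f.rule, f.component)].append(f.selector)
    return dict(groups)


if __name__ == "__main__":
    # Four per-element reports for one visual list that is marked up as four lists.
    reports = [
        Finding("list-structure", "news-list", f"ul:nth-of-type({i})")
        for i in range(1, 5)
    ]
    grouped = group_findings(reports)
    print(len(reports), "reported ->", len(grouped), "finding")  # 4 reported -> 1 finding
```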

Automated tools also highlight parsing errors, but we did not take these into account in manual testing: the "Parsing" criterion (success criterion 4.1.1) has been removed from WCAG 2.2, the latest version of WCAG, and therefore does not need to be tested.

The results of different automated tools are also not fully comparable with each other, because each tool reports findings in its own way. Most of the automated tools also highlighted "warnings" and "best practices".

For such findings, it may be unclear whether they indicate a violation of an actual accessibility criterion or simply an opportunity to improve the user experience. If manual testing reported something as an issue and an automated tool listed it under "warnings" or "best practices", we still marked it as "yes" in Table 1.
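Expressed as code, the marking rule for one row of Table 1 might look like the Python sketch below. The severity labels, the tool names and the issue identifier are placeholders of our own (each real tool names its levels differently), and the sketch assumes a reviewer has already matched each tool's findings to the manually found issues.

```python
# Placeholder severity labels; real tools each use their own wording.
COUNTED_LEVELS = {"error", "warning", "best-practice"}


def comparison_row(issue_id, tool_reports):
    """Return {tool: "yes"/"no"} for one manually found issue.

    tool_reports maps each tool name to {issue_id: level}, where a reviewer has
    already matched the tool's findings to the manual issue list.
    Findings reported only as warnings or best practices still count as "yes".
    """
    return {
        tool: "yes" if report.get(issue_id) in COUNTED_LEVELS else "no"
        for tool, report in tool_reports.items()
    }


if __name__ == "__main__":
    reports = {
        "Tool A": {"list-read-as-four-lists": "error"},
        "Tool B": {"list-read-as-four-lists": "best-practice"},
        "Tool C": {},
    }
    print(comparison_row("list-read-as-four-lists", reports))
    # {'Tool A': 'yes', 'Tool B': 'yes', 'Tool C': 'no'}
```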

This shows that while automated tests produce a variety of findings, validating them still requires accessibility knowledge and human review. The following table lists the issues found in manual testing and whether the automated tools detected them.

Testing results